It has been five years since I posted BackupPC and Bare Metal Restore of Windows XP, which has been surprisingly popular. However, Windows XP has been out of official support for quite a while now, and the same techniques, although they can be made to function with newer versions of Windows, are no longer ideal. On the plus side, there are better options for bare metal recovery now.

First, it’s worth mentioning that BackupPC is a system designed to back up files, not images, so recovery is going to be slightly imperfect. While the files themselves can be completely recovered, the ability to recover file permissions is limited, so this approach may not be suitable for a server with complex file permissions or where security of the data is paramount. File-based backups are particularly good for the case where files are lost or damaged (often through user error) but are not well suited to complete system recovery. Then again, catastrophic media failure is often an opportunity to clean out the debris that tends to accumulate over time with computer use.

So, while the ideal vehicle may have been a different backup method, such as an image backup, it’s still quite possible to recover with just the files, as I outline here. To complete this task, you’ll need: a little more than twice as much space as the system to be recovered (it can be in two different places, and that isn’t a bad idea for performance); installation media for the system to be recovered; access to either Hyper-V or a VirtualBox virtual machine (VirtualBox is free); and the drivers necessary for the system to be recovered to reach the storage. For example, if it’s on a network share, network drivers may be necessary (even if they’re built in to Windows 7).

Step 1: Build a local tar file using BackupPC_tarCreate

This is probably familiar as the exact same step one as before; I note that using gzip to save space or I/O appears to slow things down. At any rate, this is best accomplished from the command line, as the backuppc user:

BackupPC_tarCreate -t -n -1 -h borkstation -s C / > borkstation.tar

“borkstation” is the name of the host to recover, “-n -1” means the latest backup, and “-t” prints summary totals at the end. You’ll obviously need to have enough space where the tar file is going to store the entire backup, which will not be compressed. Note the space between the “C”, which represents the share to restore, and the “/”, which represents the directory to restore.
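Since the archive is uncompressed, it may be worth confirming there’s room before starting. Here is a minimal sketch of such a check. The function name have_space, the /var/tmp destination, and the size figure in the usage comment are placeholders of mine, not anything BackupPC provides; take the real size from your own backup summary.

```shell
# have_space DIR NEEDED_KB
# Succeeds if the filesystem holding DIR has at least NEEDED_KB kilobytes free.
have_space() {
    local dir=$1 needed_kb=$2 avail_kb
    # df -P forces single-line POSIX output and -k reports 1024-byte blocks;
    # column 4 of the second line is the available space.
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge "$needed_kb" ]
}

# Hypothetical usage (about 100 GB expressed in KB):
#   have_space /var/tmp 104857600 &&
#       BackupPC_tarCreate -t -n -1 -h borkstation -s C / > /var/tmp/borkstation.tar
```

If the check fails, nothing is written, which beats finding out halfway through a long restore that the disk is full.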

Step 2: Prepare base media

The point of this step is to get the drives partitioned the way you want. As before, the installation itself will just be wiped out, so it doesn’t make sense to worry about much except the partition scheme and whether or not it’s bootable; a base installation will do. You’ll want it to be able to access the network (or whatever media is being used) as well.

So, in a nutshell, you install an operating system similar to the one you’re recovering. It doesn’t need to be identical, so you can, for example, use a 32-bit version to recover a 64-bit version, or what have you. You just need a basic, running, system.

Step 3: Back up the system

Yes, the idea is to create a system image of the base system you just installed. The data will be discarded. This can be done from Control Panel->System and Security->Backup and Restore->Create a System Image. This image either needs to be placed somewhere the VM can get to it, or moved there.

Step 4: Mount and erase the drive image

The backup image created in Step 3 is a directory called “WindowsImageBackup” that contains a folder for the PC, along with a lot of metadata and one or more VHD files. These VHD files are virtual hard drives that can be directly mounted in supported VMs. You’ll need a VM image that’s capable of understanding the filesystem, but it doesn’t need to match the operating system being recovered. For VirtualBox, the VHD file can be added to the Storage tree anywhere; it can be left in place, but it will grow to the size of the total of all files to be recovered, so plan accordingly.
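If the image landed somewhere a Unix-style shell can reach, something like the following can locate the VHD files and keep an eye on how large they have grown. This is only a sketch: list_vhds is a name I made up, and the path in the usage comment is an assumption about where the image was saved.

```shell
# list_vhds DIR
# Prints the size in KB and the path of every .vhd file under DIR.
list_vhds() {
    # -iname matches case-insensitively, so ".VHD" and ".vhd" are both found.
    # du -k reports the space used in kilobytes.
    find "$1" -type f -iname '*.vhd' -exec du -k {} +
}

# Hypothetical usage:
#   list_vhds /cygdrive/z/WindowsImageBackup
```

Running it again during the restore in Step 5 shows the image growing toward the size of the recovered files.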

For Windows 7, it’s probably easiest to clean it out by right-clicking on the drive (that maps to the VHD) and performing a quick format. While it’s possible to leave the operating system and other files in place, this usually causes all kinds of permission issues recovering matching files and can result in a corrupted image, so it’s simplest just to clean it out.

Step 5: Extract backup files to the drive image

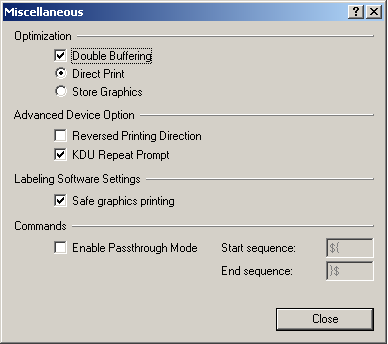

This step requires that Cygwin be installed on the VM. A stock install of Cygwin is all that’s really needed, but there’s an important change to make to fstab:

none /cygdrive cygdrive binary,noacl,posix=0,user 0 0

This is necessary because tar’s attempt to restore ACLs to directories doesn’t quite match the way Windows expects things to be done. Without adding “noacl” to fstab as above, tar will create files and directories that it doesn’t have access to, and will then fail trying to restore subdirectories.
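Because a missed edit here only shows up as failures partway through a long extraction, a quick check of the file can save some time. The sketch below assumes the fstab lives at /etc/fstab inside Cygwin; the function name cygdrive_has_noacl is mine.

```shell
# cygdrive_has_noacl [FSTAB]
# Succeeds if an uncommented cygdrive entry in FSTAB lists the noacl option.
cygdrive_has_noacl() {
    local fstab=${1:-/etc/fstab}
    # Field 3 is the filesystem type and field 4 the comma-separated options.
    # Wrapping the options in commas lets ",noacl," match only the whole word.
    awk '$1 !~ /^#/ && $3 == "cygdrive" && ("," $4 ",") ~ /,noacl,/ { found = 1 }
         END { exit !found }' "$fstab"
}

# Hypothetical usage:
#   cygdrive_has_noacl || echo "noacl is missing from the cygdrive line"
```

This only inspects the file. The setting still won’t take effect until every Cygwin window has been closed and reopened.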

After making the change and completely closing all Cygwin windows, open a Cygwin window, navigate to the destination drive, and run tar on the archive created in step one. (A shared drive will make it accessible to the VM.)

tar -xvf /cygdrive/z/borkstation.tar

“Z” in this case is the Windows drive which is mapped to the location of the archive; the path simply needs to point to the correct file. This part takes a while, and since the virtual image needs to expand on its host disk, there will be a lot of I/O. It helps to have the source archive and the destination VHD on different media.
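With -v, any errors scroll past among thousands of file names. One alternative, sketched below, is to drop the verbose listing and capture tar’s complaints in a log for review afterwards. The function name extract_logged and the drive letters in the usage comment are assumptions for illustration.

```shell
# extract_logged ARCHIVE DEST LOG
# Unpacks ARCHIVE into DEST, saving anything tar writes to stderr in LOG.
extract_logged() {
    local archive=$1 dest=$2 log=$3 rc
    # -C changes into DEST before extracting, so there is no need to cd first.
    tar -xf "$archive" -C "$dest" 2> "$log"
    rc=$?
    echo "tar exit status: $rc; $(wc -l < "$log") line(s) logged in $log"
    return "$rc"
}

# Hypothetical usage, with E: as the mounted VHD and Z: as the share:
#   extract_logged /cygdrive/z/borkstation.tar /cygdrive/e /cygdrive/z/restore-errors.log
```

An empty log and a zero exit status are a reasonable sign that the “noacl” change took effect.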

If permission restrictions aren’t important to you, now’s the time to right-click the destination drive within the VM, and grant full rights to “Authenticated Users.” This should be sufficient to prevent any lingering permission side-effects.

At this point, the VM should be shut down so the VHD is released. (It’s a bad idea for more than one owner to access a VHD at the same time.)

Step 6: Clean up

Aside from the files themselves, there are a number of things stored outside the files that need to be cleaned up. The “hidden” and “system” attributes, for example, have not been preserved. For most files, this doesn’t matter much, but Windows has “desktop.ini” files sprinkled all over the filesystem that become visible and useless unless corrected. This is easy to do from the command line:

cd \

attrib +h +s /s desktop.ini

The mapping of “read only” attributes is, unfortunately, somewhat imperfect, and the “read only” bit in Windows may be set for directories for which the backup user did not have full access. Notably, this can cause the Event service not to function properly, so its directories need to have their read-only bit unset:

attrib -r /s windows\system32\logfiles

attrib -r /s windows\system32\rtbackup

Though it doesn’t seem to cause issues to just unset the read-only bit on the entire system:

attrib -r /s *.*

One ugly thing that’s stored in the NTFS filesystem but not in any files is the set of short names generated to provide an 8.3 file name for files with longer names in Windows. These are generated on-the-fly as directories or files are added, which means that the short file names generated as files are recovered may not match the short file names as they were originally generated. For the most part, short file names aren’t used, but they may appear in the registry as references for COM objects or DLLs, and the system won’t function properly if it cannot locate these files.

The simplest way to track these down is to load the registry editor (“regedit”), select a key (Load Hive is only available under HKEY_LOCAL_MACHINE or HKEY_USERS), then use File->Load Hive to load the recovered registry from windows\system32\config on the drive image. Then, searching for “~2”, “~3”, and so on will yield any potential conflicts between generated short names. While the registry can simply be updated, it’s usually easier to update the generated short name, which can be done from the command line:

fsutil file setshortname "Long File Name" shortn~1

Note that switching the short names of two files or directories takes three steps, but since short names can be anything at all, this is relatively straightforward.
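To make the three steps concrete, here is a small sketch that prints the fsutil commands for a swap rather than running them, so they can be reviewed and pasted into a command prompt in the right directory. The function name print_shortname_swap and the temporary name TMPSWAP1 are placeholders of mine; any 8.3 name not already in use in that directory should do.

```shell
# print_shortname_swap FILE_A SHORT_A FILE_B SHORT_B
# FILE_A currently has SHORT_A and FILE_B has SHORT_B; prints the three
# fsutil commands that leave FILE_A with SHORT_B and FILE_B with SHORT_A.
print_shortname_swap() {
    local file_a=$1 short_a=$2 file_b=$3 short_b=$4
    local tmp_name='TMPSWAP1'   # hypothetical unused 8.3 name
    printf 'fsutil file setshortname "%s" %s\n' "$file_a" "$tmp_name"  # 1. free SHORT_A
    printf 'fsutil file setshortname "%s" %s\n' "$file_b" "$short_a"   # 2. B takes A's old name
    printf 'fsutil file setshortname "%s" %s\n' "$file_a" "$short_b"   # 3. A takes B's old name
}

# Example: the restore gave "Program Files" the name PROGRA~2 and
# "Program Files (x86)" the name PROGRA~1, the reverse of the original.
print_shortname_swap "Program Files" 'PROGRA~2' "Program Files (x86)" 'PROGRA~1'
```

Running “dir /x” in the directory before and after shows the short names alongside the long ones, which makes it easy to confirm the swap worked.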

The last piece to do is file ownership/permissions and ACLs. Since none of this is preserved in a file backup, I find it easiest to right-click on the recovered image and give full control to “Authenticated Users,” to prevent problems accessing files. Your mileage and security concerns may vary.

Step 7: Recover the image

The simplest way to do this is to boot the system to be recovered to the vanilla operating system installed to produce the image, and use the same Control Panel to select Recovery, then Advanced Recovery Methods, then “Use a system image you created earlier to recover your computer.”

This can also be accomplished from installation media, which is handy if anything goes wrong. Rather than installing a new operating system, selecting “Repair your computer,” then moving on to Advanced Recovery Methods, should also be able to restore the image. If you use this method, it may be necessary to manually load network drivers before being able to access shares.

Note: after the image is restored, the system may complain about not being able to load drivers, and claim that the restore failed. I’m not sure why this occurs, but it doesn’t seem to matter. Reboot, and the system should be mostly back to normal.